Method

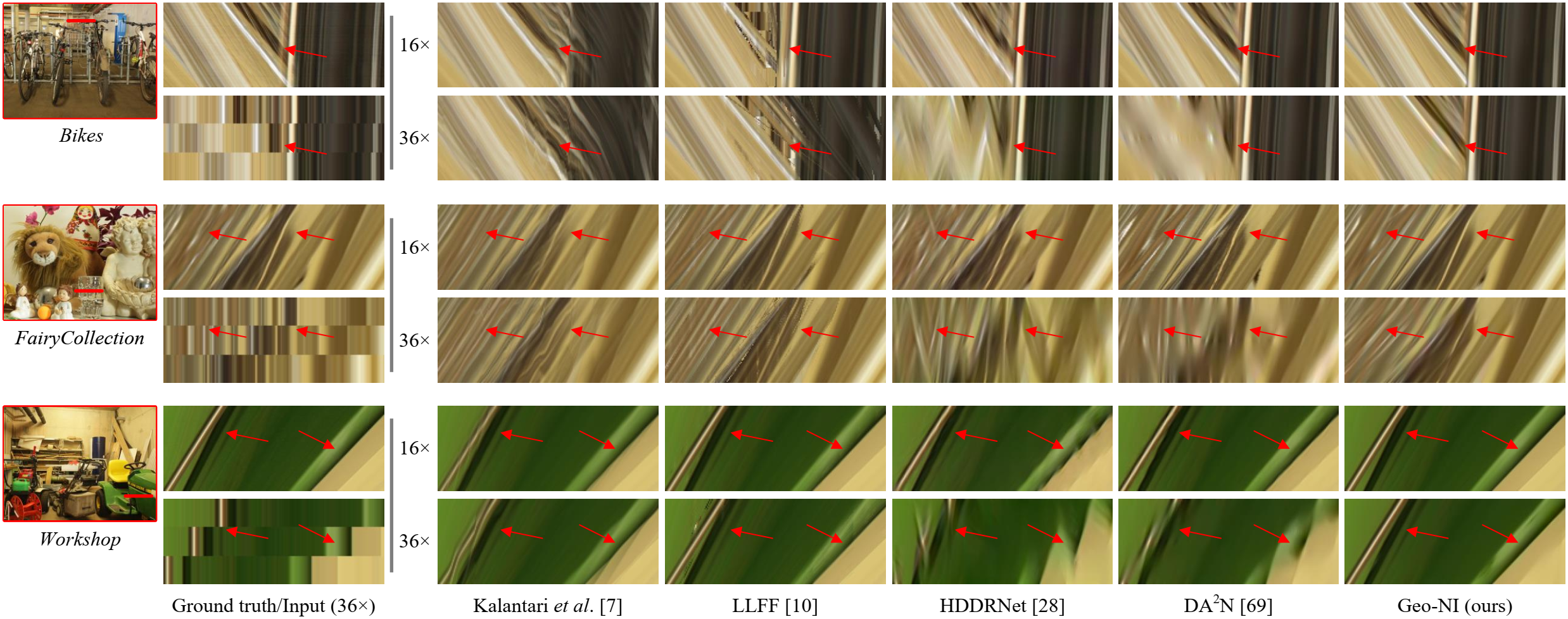

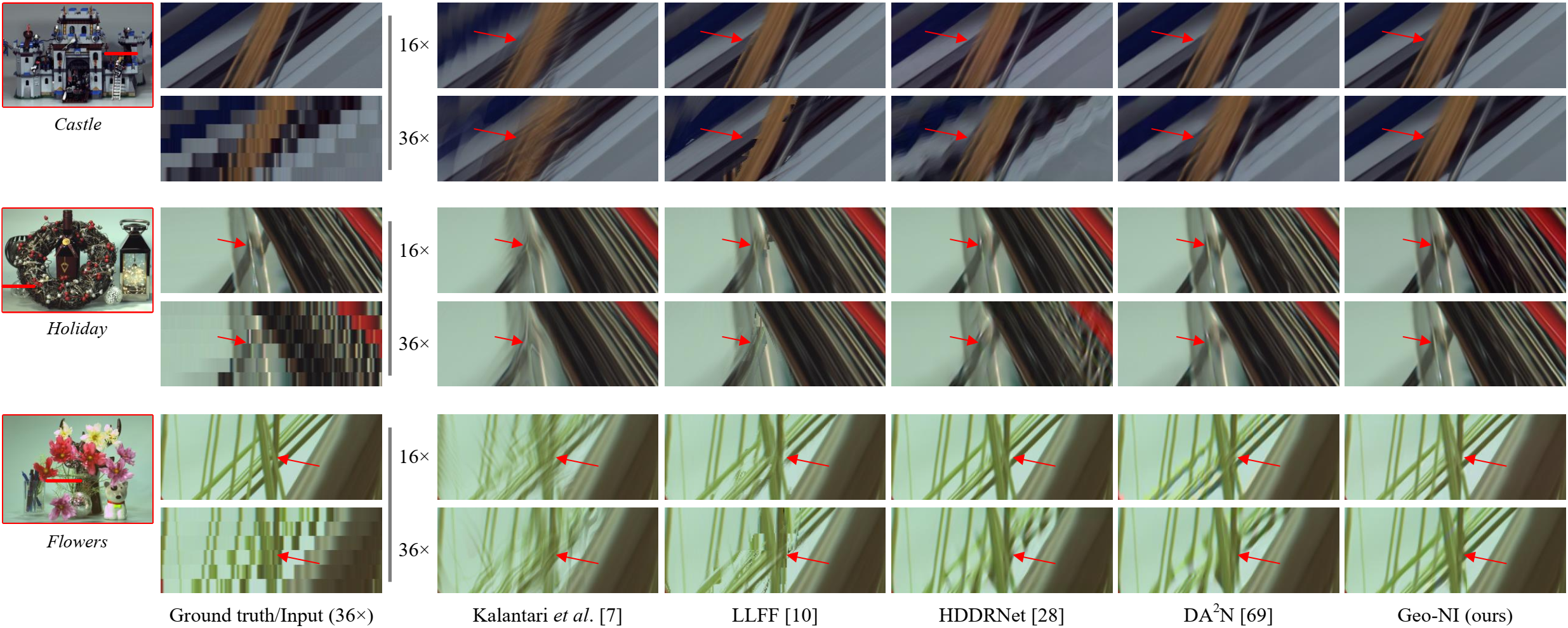

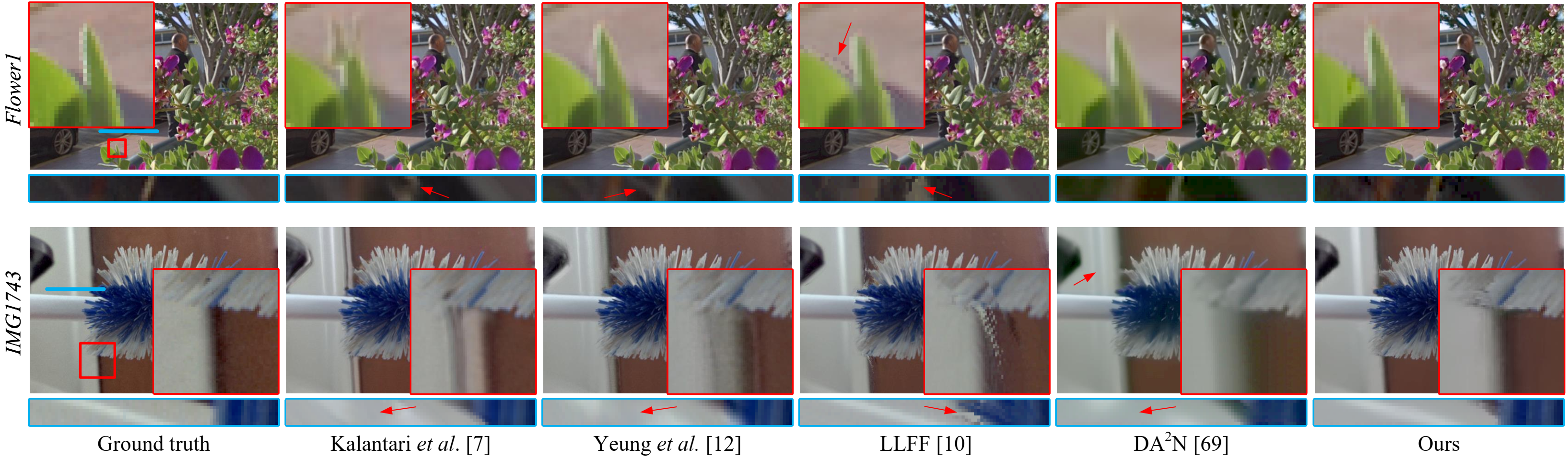

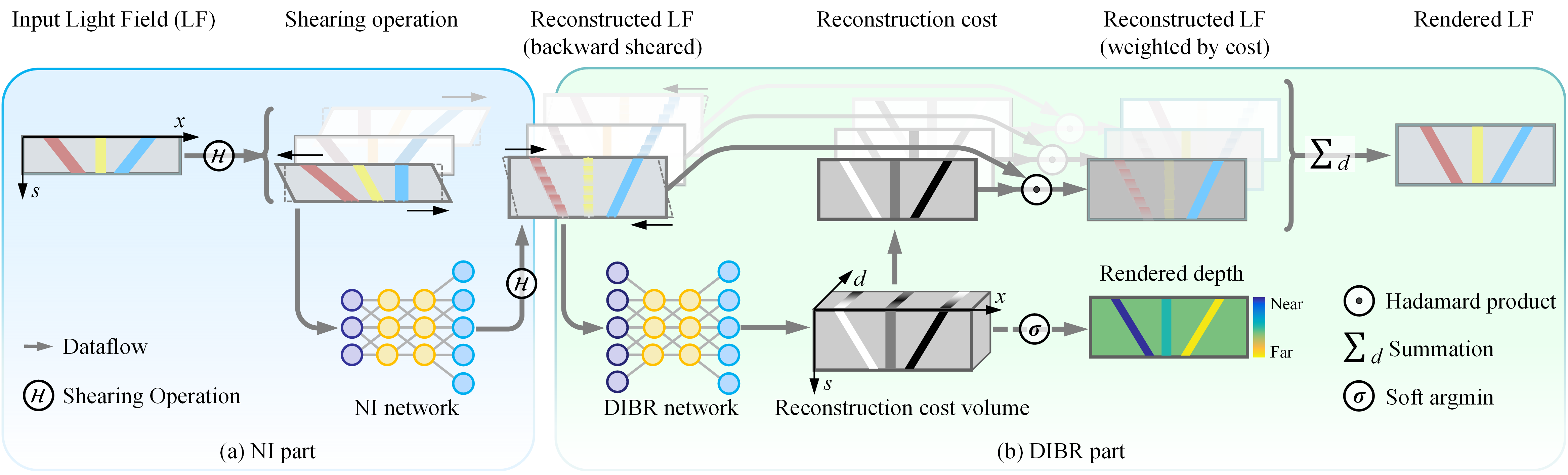

Overview: We present Geo-NI, a framework for geometry-aware light field (LF) rendering that embeds Neural Interpolation (NI) within a novel Depth Image-Based Rendering (DIBR) pipeline. The framework can render LFs with large disparity while also reconstructing non-Lambertian effects. Because it is aware of the scene geometry, it can also render a multi-plane image (MPI, a layered scene representation) and the scene depth.

Network: The proposed Geo-NI framework is composed of two parts: (a) a Neural Interpolation (NI) part that directly reconstructs the sheared LFs; and (b) a Depth Image-Based Rendering (DIBR) part that assigns a reconstruction cost map to each reconstructed LF.
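To make the shear-then-interpolate idea concrete, here is a minimal sketch on a single EPI (one angular and one spatial dimension) with two input views. It is not the Geo-NI implementation: the learned NI network is replaced by linear angular interpolation, the DIBR cost is replaced by a hypothetical photo-consistency cost (the absolute difference of the two sheared input views), and the per-pixel softmax fusion over disparity hypotheses is likewise an assumption. The names `shear`, `render_hypothesis`, and `blend` are illustrative only.

```python
import math
from typing import List, Tuple

Row = List[float]


def sample(row: Row, x: int) -> float:
    """Read row[x], clamping x to the valid range (assumed border handling)."""
    return row[min(max(x, 0), len(row) - 1)]


def shear(row: Row, s: int, d: int) -> Row:
    """Shear the EPI row at angular position s by disparity hypothesis d.

    A scene point whose true disparity equals d lands on the same x in every
    sheared row, i.e. it becomes a vertical line in the sheared EPI.
    """
    return [sample(row, x - d * s) for x in range(len(row))]


def render_hypothesis(view_a: Row, s_a: int, view_b: Row, s_b: int,
                      s_t: int, d: int) -> Tuple[Row, Row]:
    """Render the row at angular position s_t under hypothesis d.

    Returns (rendered row, cost map). Linear interpolation stands in for the
    learned NI network; the cost is a hypothetical photo-consistency measure.
    """
    sa, sb = shear(view_a, s_a, d), shear(view_b, s_b, d)
    t = (s_t - s_a) / (s_b - s_a)
    sheared_target = [(1 - t) * a + t * b for a, b in zip(sa, sb)]
    sheared_cost = [abs(a - b) for a, b in zip(sa, sb)]
    # Undo the shear at the target position: view_s[y] == sheared_s[y + d * s].
    width = len(view_a)
    rendered = [sample(sheared_target, y + d * s_t) for y in range(width)]
    cost = [sample(sheared_cost, y + d * s_t) for y in range(width)]
    return rendered, cost


def blend(candidates: List[Tuple[Row, Row]], tau: float = 0.01) -> Row:
    """Fuse per-hypothesis renderings with a per-pixel softmax over -cost / tau."""
    width = len(candidates[0][0])
    out = []
    for y in range(width):
        c_min = min(cost[y] for _, cost in candidates)  # for numerical stability
        weights = [math.exp(-(cost[y] - c_min) / tau) for _, cost in candidates]
        total = sum(weights)
        out.append(sum(w * r[y] for w, (r, _) in zip(weights, candidates)) / total)
    return out


# Toy scene: a textured 1D signal with a true disparity of 2 px per view.
def texture(u: float) -> float:
    return math.sin(0.7 * u)


TRUE_D, WIDTH = 2, 16
views = {s: [texture(x + TRUE_D * s) for x in range(WIDTH)] for s in (0, 1, 2)}
# Render the middle view (s = 1) from the outer views, testing d in {0, 1, 2, 3}.
candidates = [render_hypothesis(views[0], 0, views[2], 2, 1, d) for d in range(4)]
novel = blend(candidates)
```

Away from the image borders, where clamped sampling has no valid data, `novel` closely matches the held-out `views[1]`: shearing by the true disparity makes both inputs agree, so that hypothesis has zero cost and dominates the softmax, while wrong hypotheses leave residual disagreement that the fusion suppresses.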

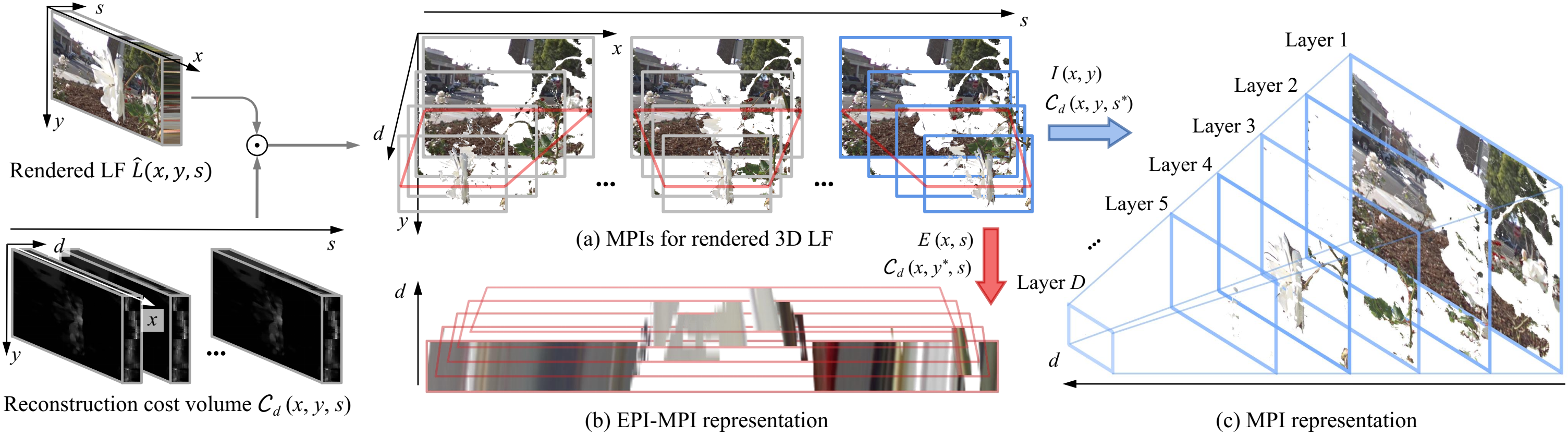

MPI representation: The reconstruction cost produced by the proposed Geo-NI framework can be interpreted as the alpha (opacity) channel of an MPI representation. (a) The rendered 3D LF can be promoted to MPIs for both the input views and the reconstructed views. The reconstruction cost volume can also be converted to (b) the EPI-MPI representation, built on epipolar-plane images (EPIs), or (c) the vanilla MPI representation, simply by slicing it along different dimensions.
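The following sketch illustrates both MPI operations on a toy cost volume indexed as `volume[d][s][x]` (depth hypothesis, angular position, spatial position). Several choices here are assumptions rather than details from the paper: costs are mapped to per-pixel weights with a softmax over negative cost, planes are taken to be ordered front to back, fixing `s` is taken to give the vanilla MPI, and fixing `d` the EPI-MPI layer. The weights-to-alpha step uses the standard identity that lets a weighted sum over planes be reproduced exactly by front-to-back "over" compositing. All function names are hypothetical.

```python
import math
from typing import List

# Cost volume indexed as volume[d][s][x]: depth hypothesis, view, pixel.
Volume = List[List[List[float]]]


def weights_from_cost(volume: Volume, tau: float = 0.1) -> Volume:
    """Map costs to per-pixel weights that sum to 1 over the depth axis.

    Softmax over negative cost is an assumed mapping, not the paper's.
    """
    n_d, n_s, n_x = len(volume), len(volume[0]), len(volume[0][0])
    out = [[[0.0] * n_x for _ in range(n_s)] for _ in range(n_d)]
    for s in range(n_s):
        for x in range(n_x):
            c_min = min(volume[d][s][x] for d in range(n_d))
            e = [math.exp(-(volume[d][s][x] - c_min) / tau) for d in range(n_d)]
            total = sum(e)
            for d in range(n_d):
                out[d][s][x] = e[d] / total
    return out


def vanilla_mpi(volume: Volume, s: int) -> List[List[float]]:
    """Fix the view s: one plane (over x) per depth hypothesis."""
    return [volume[d][s] for d in range(len(volume))]


def epi_mpi(volume: Volume, d: int) -> List[List[float]]:
    """Fix the hypothesis d: one EPI-shaped (s, x) layer."""
    return volume[d]


def weights_to_alphas(weights: List[float]) -> List[float]:
    """Convert front-to-back plane weights (summing to 1) to 'over' alphas."""
    alphas, remaining = [], 1.0
    for w in weights:
        alphas.append(min(1.0, w / remaining) if remaining > 1e-12 else 0.0)
        remaining -= w
    return alphas


def over_composite(colors: List[float], alphas: List[float]) -> float:
    """Front-to-back 'over' compositing of per-plane colors at one pixel."""
    out, transmittance = 0.0, 1.0
    for c, a in zip(colors, alphas):
        out += transmittance * a * c
        transmittance *= 1.0 - a
    return out


# Toy example: 3 hypotheses, 2 views, 4 pixels (values are made up).
cost_volume = [
    [[0.9, 0.1, 0.5, 0.7], [0.8, 0.2, 0.4, 0.6]],
    [[0.1, 0.8, 0.5, 0.2], [0.3, 0.9, 0.4, 0.1]],
    [[0.6, 0.7, 0.5, 0.9], [0.5, 0.6, 0.4, 0.8]],
]
w = weights_from_cost(cost_volume)
planes = vanilla_mpi(w, s=0)    # 3 planes of width 4 for view 0
layer = epi_mpi(w, d=1)         # 2 x 4 EPI-shaped layer
plane_colors = [0.2, 0.5, 0.9]  # hypothetical per-plane colors at pixel x = 0
pixel_weights = [plane[0] for plane in planes]
composited = over_composite(plane_colors, weights_to_alphas(pixel_weights))
```

With these alphas, the transmittance in front of each plane multiplied by that plane's alpha equals its weight, so compositing reproduces the weighted sum of plane colors exactly; this is one way to read a normalized cost volume as MPI alpha.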